Table of Contents

- What is Marble A Multimodal?

- Marble A Multimodal Overview

- Inputs and quick generation

- Workflow and spatial understanding

- Editing, composition, and export

- Marble A Multimodal feature table

- Key features

- How to use Marble A Multimodal - step by step

- Create a world from a text prompt

- Use images, video, or 3D inputs

- Refine, expand, and export

- Editing in practice

- Targeted edits with Panoedit

- Building larger, traversable areas

- Visual quality and technical notes

- Gaussian splats and triangle meshes

- Video input and motion detail

- My test run and observations

- Prompt used and result

- Strengths and gaps I noticed

- Example scenarios from the session

- Practical tips for better results

- Export and integration

- Where Marble A Multimodal fits today

- Conclusion

Marble by World Labs: AI That Builds 3D Worlds from Anything

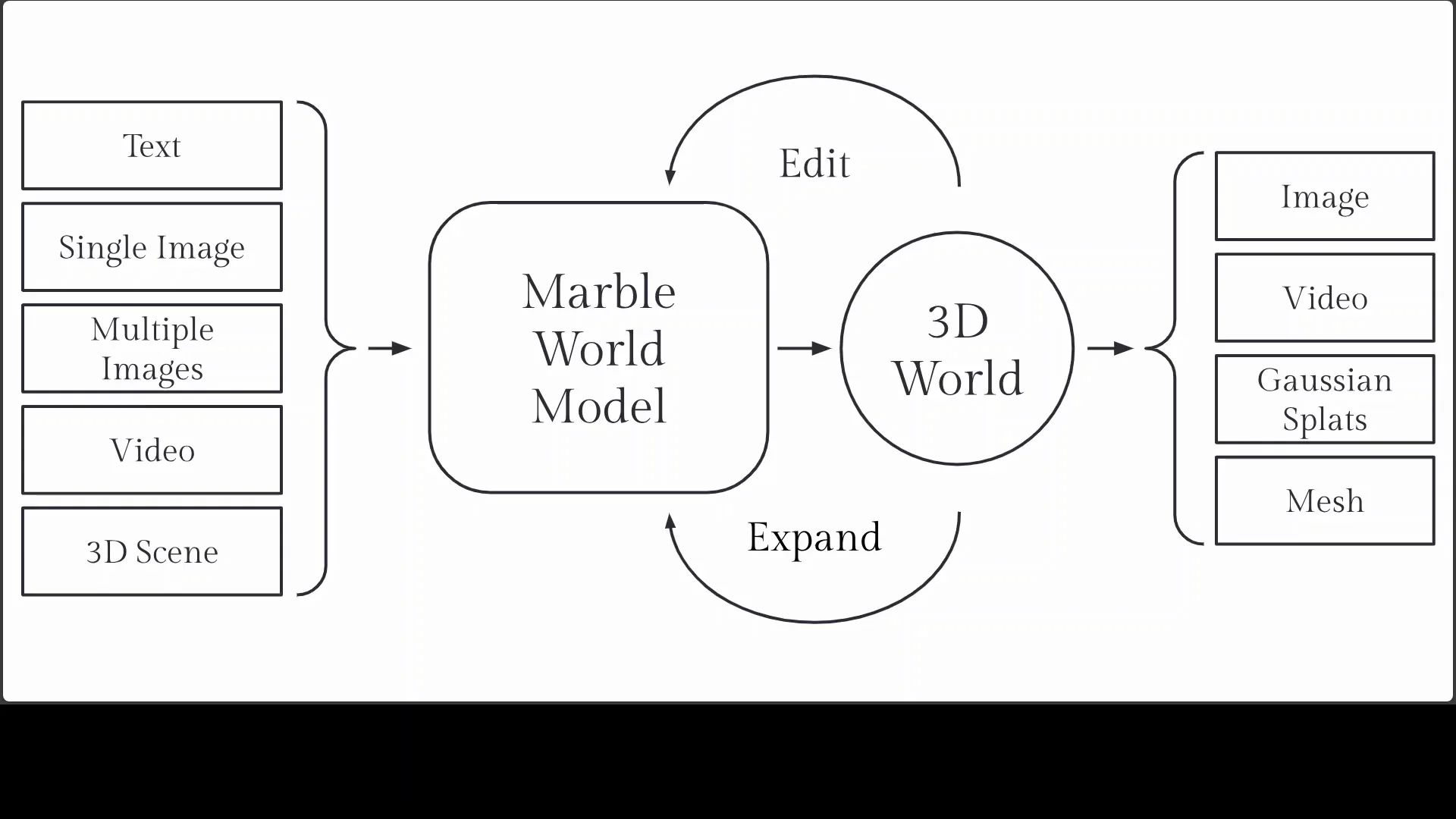

Marble is a platform from World Labs that creates high-fidelity, persistent 3D worlds from simple inputs. It focuses on spatial intelligence, turning text, images, video, or coarse 3D structure into explorable environments within minutes. The result is a fast path from an idea to a navigable scene that can be edited, expanded, and exported for web, VR, AR, and game engines.

In this article, I walk through what Marble A Multimodal is, how it works, how to use it, and what I observed while creating a world. The goal is to give you a clear understanding of its workflow, editing tools, export options, and current strengths and gaps.

What is Marble A Multimodal?

Marble A Multimodal is a world model and creation studio that interprets multi-format inputs and builds coherent 3D spaces. You can start from a single text prompt, several images, short video clips, or a basic 3D layout. The system constructs a full environment, complete with spatial structure, surfaces, lighting, and scene composition.

The platform is designed for accessibility. You can produce explorable 3D spaces without deep experience in 3D modeling. After the first pass, you can refine the scene with targeted edits or scale it into larger traversable areas. You can also compose multiple worlds into a single environment and record cinematic shots.

Marble A Multimodal Overview

Marble combines input interpretation, scene generation, editing, and export in one workflow. It supports quick world creation for hobby projects and more complex pipelines for professional use.

Inputs and quick generation

You can start with:

- Text prompts

- Sets of images

- Video clips

- Coarse 3D structure, models, and primitives

Generation runs in minutes. Some input options may require an upgraded plan. The platform queues your job, builds the environment, and presents an explorable scene you can review and edit.

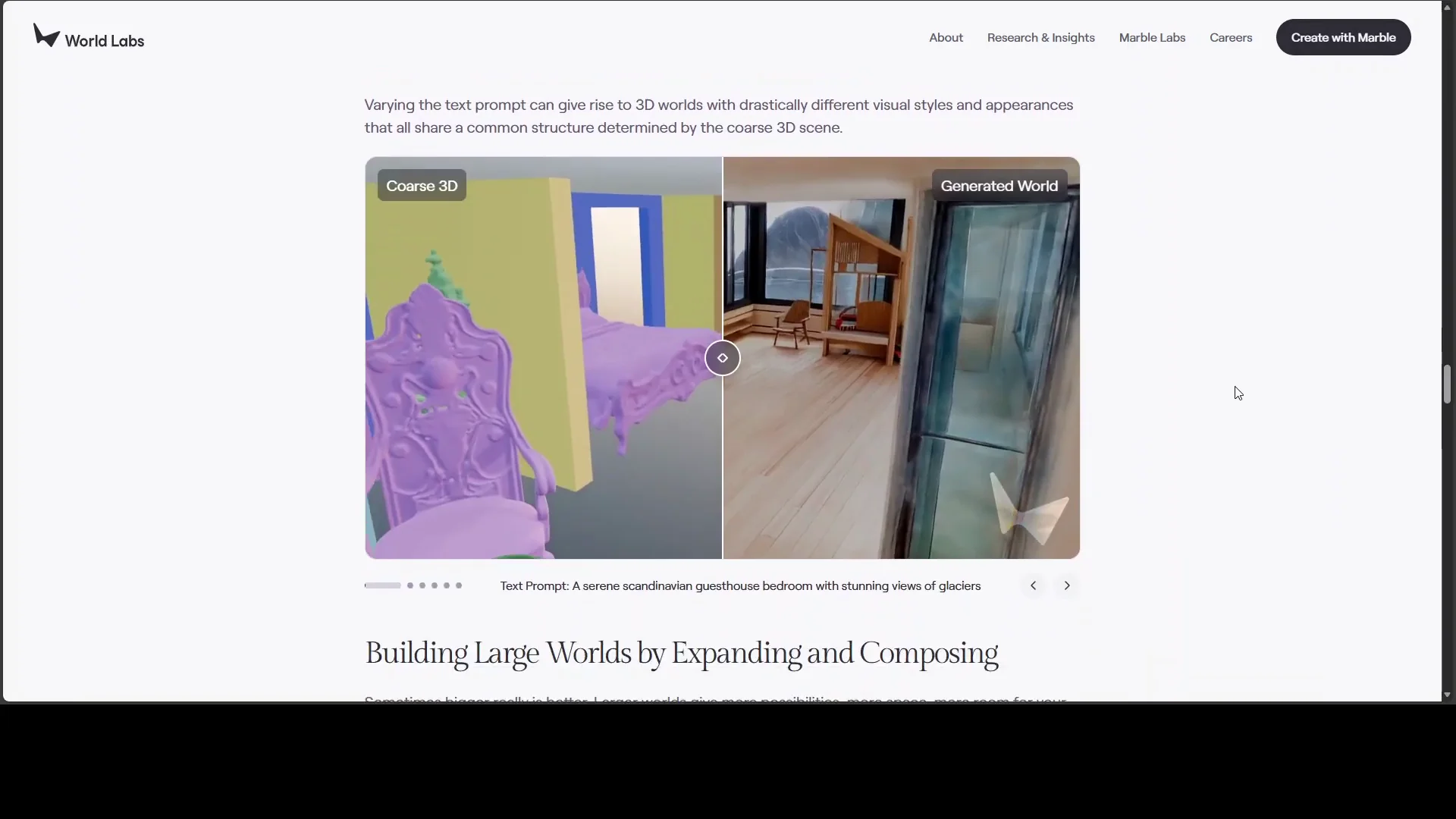

Workflow and spatial understanding

The core workflow begins with AI interpretation of your input. It analyzes spatial relationships, materials, and lighting cues to infer a coherent layout. That spatial understanding drives the 3D structure, camera paths, and environment details that make the world feel consistent.

After generation, the scene is editable. You can adjust specific regions, change materials, modify lighting, or expand the environment beyond the initial boundaries.

Editing, composition, and export

Marble includes:

- Panoedit for targeted modifications

- A studio to compose multiple worlds

- Tools for creating cinematic recordings

- Export options for web, VR, AR, and game engines

This makes it practical for quick previews and for building assets that fit into larger workflows.

Marble A Multimodal feature table

| Capability | What it does | Notes |
|---|---|---|
| Text-to-world | Builds a 3D world from a single prompt | Fast path from idea to scene |
| Multi-image input | Fuses several images into a coherent space | Produces consistent materials and lighting |
| Video input | Converts short clips into 3D scenes | Preserves motion cues and improves detail |
| 3D coarse structure | Uses basic geometry as a scaffold | Accepts models and primitives |
| Panoedit | Targeted edits to specific regions | Adjust structure, materials, or objects |
| World composition | Combines multiple worlds into one scene | Useful for large areas |
| Cinematic tools | Records planned camera moves | Good for previews and reels |
| Export formats | Web, VR, AR, game engines | Flexible integration with pipelines |
| Larger traversable areas | Expands scenes beyond the initial view | Good for connected spaces |
| Mesh and splat support | Works with triangle meshes and Gaussian splats | Enables different reconstruction methods |

Key features

- Multi-input generation from text, images, video, or coarse 3D structure

- Fast world creation with coherent spatial layout and lighting

- Targeted editing with Panoedit for local changes

- Composition of multiple worlds for expanded environments

- Cinematic recording tools for polished previews

- Export for web, VR, AR, and game engines

- Support for triangle meshes and Gaussian splats

- Option to grow scenes into larger traversable areas

- Good results with multi-image prompts

- Improved motion detail from video input, with water and flame effects that read well

How to use Marble A Multimodal - step by step

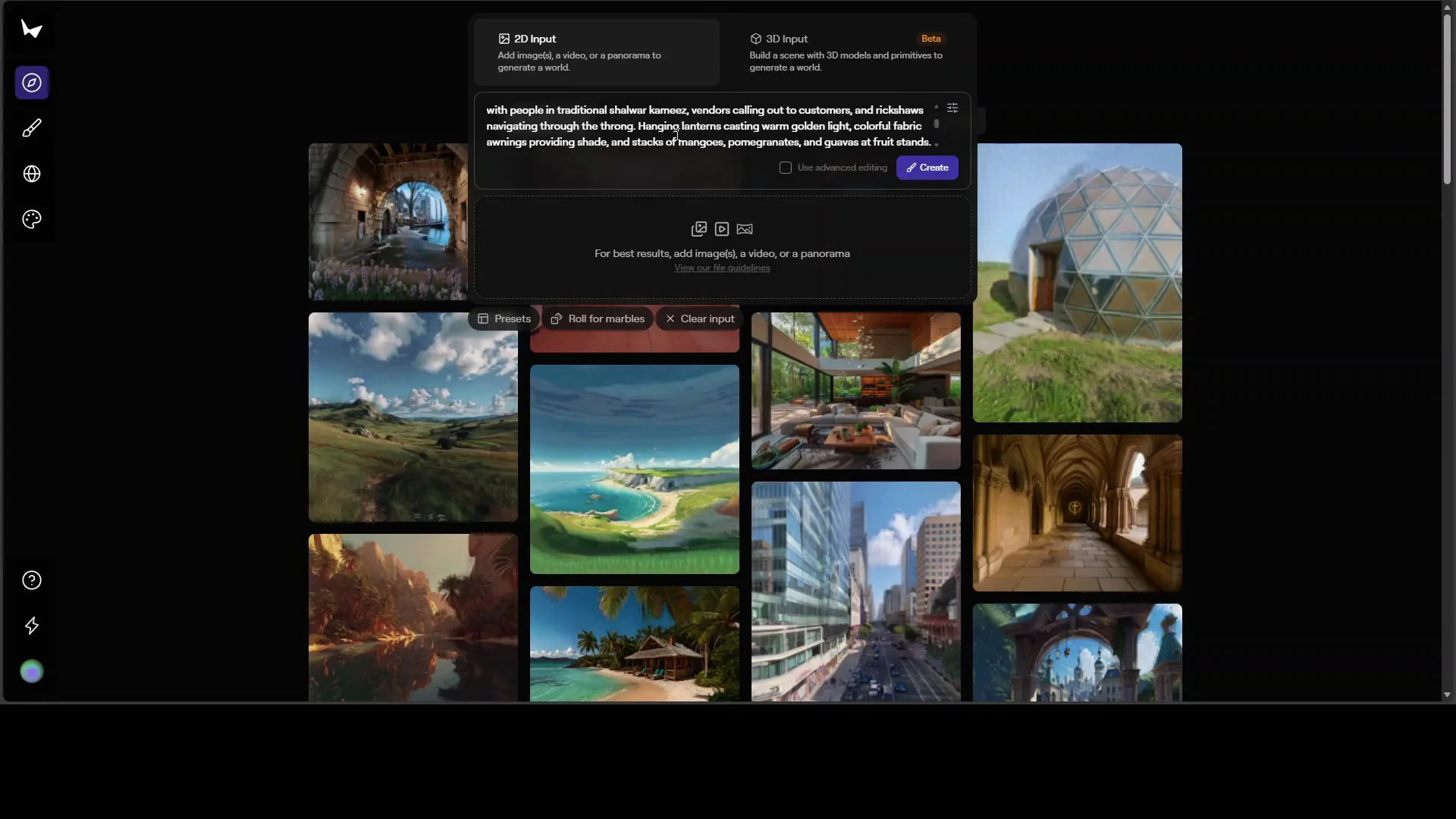

The platform is available at marble.worldlabs.ai. I outline the typical process below, including the steps I followed during my test.

Create a world from a text prompt

- Sign in with your preferred account.

- Choose the creation mode. Text is the simplest starting point.

- Enter a clear, specific prompt that describes the scene, mood, and materials.

- Select input options and any available presets or defaults.

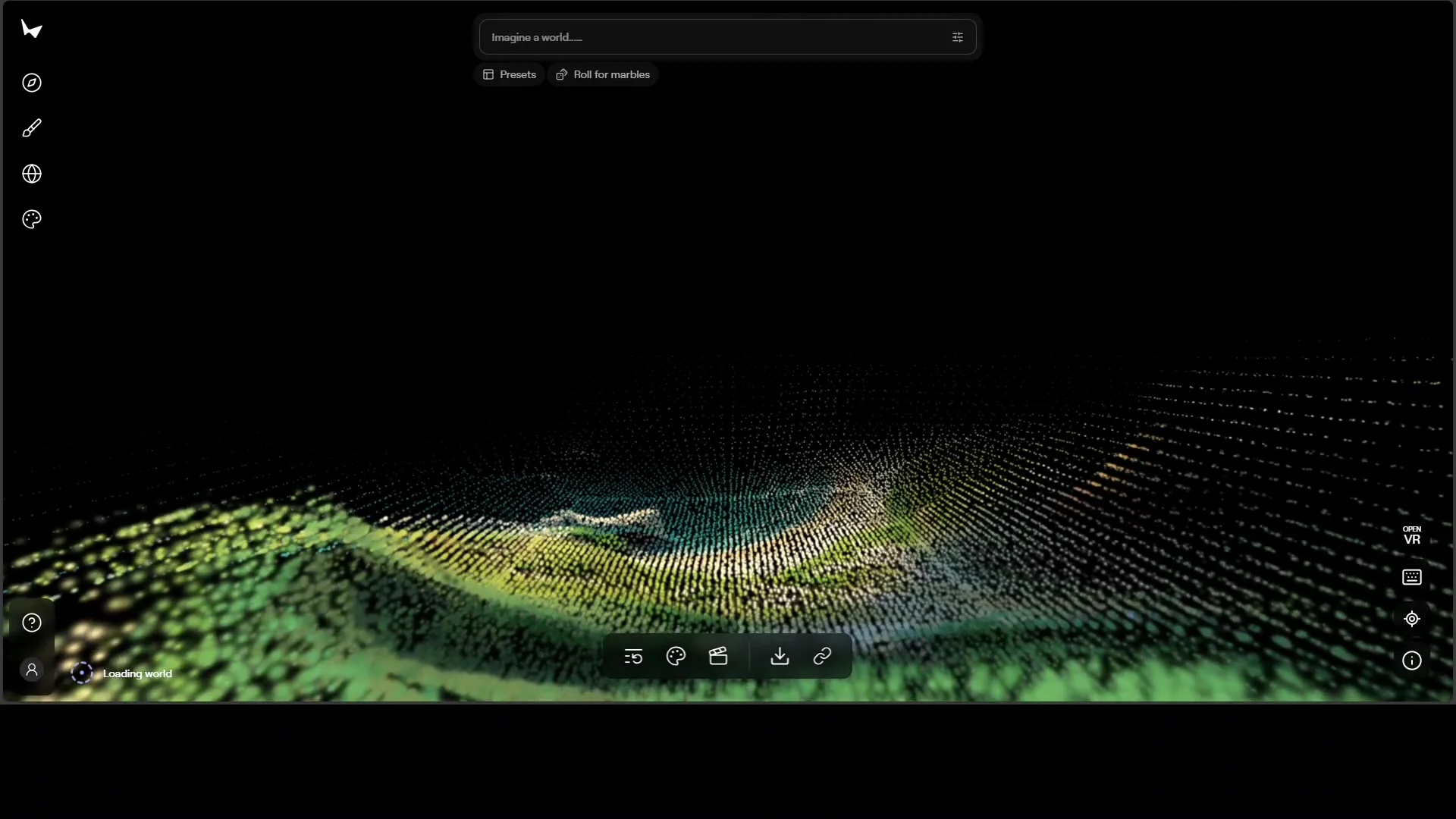

- Click Create. The job will queue and process for a few minutes.

- Open the generated world and scrub through the preview to inspect the result.

Tips:

- Include structural cues in the prompt, such as layout, objects, and materials.

- Add lighting preferences if they matter for the scene.

- Keep an eye on the queue status if it is busy.
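One way to keep prompts consistent is to assemble them from named parts. The sketch below is a hypothetical helper of my own, not part of Marble: the `ScenePrompt` class and its field names are assumptions, and the lighting value is purely illustrative. It only produces a text string to paste into the prompt box.

```python
from dataclasses import dataclass, field


@dataclass
class ScenePrompt:
    """Hypothetical helper for assembling a text prompt; not a Marble API."""
    location: str
    layout: str = ""
    key_objects: list[str] = field(default_factory=list)
    materials: list[str] = field(default_factory=list)
    lighting: str = ""

    def render(self) -> str:
        # Start with the location, then append only the cues that were provided.
        parts = [self.location.strip()]
        if self.layout:
            parts.append(f"Layout: {self.layout.strip()}")
        if self.key_objects:
            parts.append("Key objects: " + ", ".join(self.key_objects))
        if self.materials:
            parts.append("Materials: " + ", ".join(self.materials))
        if self.lighting:
            parts.append(f"Lighting: {self.lighting.strip()}")
        return ". ".join(parts) + "."


prompt = ScenePrompt(
    location="A bustling bazaar in Karachi",
    layout="narrow winding lanes lined with colorful shops",
    key_objects=["textile stalls"],
    materials=["colorful fabrics"],
    lighting="warm afternoon light",  # illustrative value, adjust to taste
)
print(prompt.render())
```

Keeping the cues in separate fields makes it easy to change one aspect, such as lighting, and regenerate without rewording the rest of the prompt.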

Use images, video, or 3D inputs

- Upload multiple images if you want the system to infer structure from references.

- For video input, provide a short clip of the area you want reconstructed.

- For 3D, bring a coarse layout or primitives to guide geometry and composition.

- Confirm the input settings, as some options may require an upgraded plan.

- Generate the scene and compare it with your source references.

Tips:

- Multi-image sets often produce strong results with consistent materials.

- Short videos can add motion and surface detail that single frames miss.

- Coarse structure helps fix the layout when accuracy matters.

Refine, expand, and export

- Use Panoedit to target regions that need adjustments.

- Modify materials, objects, and lighting to match your intent.

- Expand the scene to create larger traversable areas if needed.

- Compose multiple worlds in the studio to build connected spaces.

- Record cinematic paths for a clean showcase of the result.

- Export for the web, VR, AR, or a game engine, based on your workflow.

Tips:

- Iterate edits in small passes to keep control of the result.

- Save copies before large changes so you can compare versions.

- Confirm export format and scale for downstream tools.
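A quick way to sanity-check scale after export is to measure a reference object whose real size you know and compute a correction factor. This is a minimal sketch with assumed numbers; the function names are hypothetical, and how you obtain the measured height depends on your downstream tool.

```python
def uniform_scale_factor(measured_height: float, expected_height_m: float) -> float:
    """Return the factor that rescales a scene so a reference object has the expected height."""
    if measured_height <= 0 or expected_height_m <= 0:
        raise ValueError("heights must be positive")
    return expected_height_m / measured_height


def rescale(points, factor):
    """Apply a uniform scale about the origin to a list of (x, y, z) points."""
    return [(x * factor, y * factor, z * factor) for x, y, z in points]


# Assumed example: a doorway measures 0.5 units in the export
# but should be about 2.0 m tall in the target engine.
factor = uniform_scale_factor(measured_height=0.5, expected_height_m=2.0)
corners = [(0.0, 0.0, 0.0), (0.25, 0.5, 0.0)]
print(factor)
print(rescale(corners, factor))
```

In practice you would usually apply the factor as a single transform on the imported scene in your engine rather than editing points by hand.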

Editing in practice

Marble’s editing tools are built for focused changes without reconstructing the whole scene. This is especially useful for aligning the world with an art direction or a functional need.

Targeted edits with Panoedit

Panoedit supports:

- Structural tweaks to reposition or replace objects

- Material changes, such as switching counters or surfaces

- Lighting adjustments for mood and clarity

In practice, I saw edits that replaced tables with low benches, adjusted scene layout, and swapped kitchen surfaces to black granite. These edits preserved the spatial logic of the room and stayed consistent with the rest of the scene.

Building larger, traversable areas

After the initial world is generated, you can push beyond the boundaries. The platform can grow the environment from a small area into a more extensive space. This is useful for:

- Connecting rooms

- Extending streets or corridors

- Building continuous exploration paths

The resulting areas feel coherent when expanded carefully, preserving materials and lighting across sections.
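When planning a larger area, it can help to write down which worlds should connect and check that every one is reachable on foot. The sketch below is a planning aid only, under the assumption that connections are two-way passages you intend to build. The area names are made up, and nothing here calls Marble.

```python
from collections import deque

# Hypothetical plan: each key is an area, each value lists the areas it connects to.
connections = {
    "entrance hall": ["gallery"],
    "gallery": ["entrance hall", "courtyard"],
    "courtyard": ["gallery"],
    "storage room": [],  # not yet connected to anything
}


def reachable_from(start, graph):
    """Breadth-first search returning every area reachable from start."""
    seen = {start}
    queue = deque([start])
    while queue:
        current = queue.popleft()
        for neighbor in graph.get(current, []):
            if neighbor not in seen:
                seen.add(neighbor)
                queue.append(neighbor)
    return seen


visited = reachable_from("entrance hall", connections)
unreached = sorted(set(connections) - visited)
print(unreached)
```

Any area listed in `unreached` still needs a corridor or doorway before the space is continuously explorable.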

Visual quality and technical notes

Marble focuses on spatial intelligence. It reads cues from the input to build an environment that looks coherent and navigable. The system supports different reconstruction methods and uses motion cues in video to improve detail.

Gaussian splats and triangle meshes

The platform can work with Gaussian splats and triangle meshes. In practice:

- Splats can represent surfaces from sparse views

- Meshes provide a solid foundation for geometry and collision

- Meshes can be built on top of splats to combine strengths

This hybrid approach supports both speed and structure. It helps preserve soft surfaces and fine detail while delivering usable geometry for editing and export.
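To make the difference between the two representations concrete, here is a simplified sketch of what each one stores. The field names are illustrative assumptions, not Marble's export format, and real Gaussian splat files typically store view-dependent color coefficients rather than a single RGB value. The `bounding_box` helper is a hypothetical example of deriving rough geometry from splat centers, which is far cruder than building a mesh.

```python
from dataclasses import dataclass


@dataclass
class GaussianSplat:
    """One splat: a soft, semi-transparent 3D blob (simplified)."""
    center: tuple[float, float, float]
    scale: tuple[float, float, float]            # extent along each local axis
    rotation: tuple[float, float, float, float]  # orientation quaternion (w, x, y, z)
    color: tuple[float, float, float]            # RGB in [0, 1]
    opacity: float                               # 0 = invisible, 1 = opaque


@dataclass
class TriangleMesh:
    """Explicit surface: shared vertices plus triangles that index into them."""
    vertices: list[tuple[float, float, float]]
    triangles: list[tuple[int, int, int]]


def bounding_box(splats):
    """Axis-aligned box around splat centers (ignores each splat's own extent)."""
    xs, ys, zs = zip(*(s.center for s in splats))
    return (min(xs), min(ys), min(zs)), (max(xs), max(ys), max(zs))


identity = (1.0, 0.0, 0.0, 0.0)
splats = [
    GaussianSplat((0.0, 0.0, 0.0), (0.1, 0.1, 0.1), identity, (0.8, 0.2, 0.2), 0.9),
    GaussianSplat((1.0, 2.0, -1.0), (0.2, 0.1, 0.1), identity, (0.2, 0.2, 0.8), 0.5),
]
low, high = bounding_box(splats)
print(low, high)

# A single triangle, for comparison: three vertices and one index triple.
triangle = TriangleMesh(
    vertices=[(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0)],
    triangles=[(0, 1, 2)],
)
```

The contrast is the point: splats carry opacity and soft extents, which suits fuzzy or fine detail, while a mesh has hard, indexed surfaces that engines can use directly for collision.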

Video input and motion detail

Video input adds temporal information that aids reconstruction. In the examples I reviewed:

- Flames read convincingly after enhancement

- Water had pleasing motion cues

- Overall physics read well for the intended scenes

Short clips with steady movement tend to provide enough information for improved surface and lighting inference.

My test run and observations

To see how the system behaves, I created a world from a text prompt. I kept the setup simple and used the default options to mirror a typical first session.

Prompt used and result

I prompted Marble to build a bustling Pakistani bazaar in Karachi with narrow winding lanes and colorful shops filled with textiles. The system generated a navigable scene and placed market elements across the space.

The result aligned with parts of my prompt, though some items lacked clarity. Text details were missing, and certain merchandise did not read as intended. Lighting and general layout felt coherent, but object quality varied.

Strengths and gaps I noticed

Strengths:

- Quick generation from a single prompt

- Coherent layout and lighting

- Easy world preview and navigation

- Editing tools for targeted fixes

- Strong multi-image and video-driven results in the examples

Gaps:

- Some assets lacked detail or accuracy

- Text elements were not present

- My free session did not match the quality of curated examples

- Prompts may require more careful phrasing for complex scenes

- Some options appear to be gated by an upgrade

These observations suggest that careful prompting and iterative edits are valuable. I also expect higher fidelity from paid tiers and from workflows that start with multi-image or 3D inputs.

Example scenarios from the session

The examples I reviewed illustrate what the platform can achieve across different inputs and edits.

Original vs edited scenes:

- Replace tables with low benches

- Change counters to black granite

- Adjust layout and lighting for mood and clarity

Generated worlds:

- Art museum with wooden flooring and colorful paintings

- Bedroom with a panoramic exterior view

- A large train composed of compartments with varied themes

Larger areas:

- Extended traversable spaces with consistent lighting and surface continuity

Video-driven improvements:

- Flames and water that read well after enhancement

- Physics that look convincing for the scene context

These cases show the range of edits and the benefits of structured input. Scenes improved with targeted adjustments and with sources that include rich visual cues.

Practical tips for better results

Start with a clear prompt:

- Name the location type, layout, materials, and lighting

- Include key objects and how they are arranged

Use structured input:

- Add multiple images for consistent materials and surfaces

- Provide a short video to capture motion and context

- Supply a coarse 3D layout to lock in structure

Iterate with edits:

- Fix local issues with Panoedit

- Expand the world gradually

- Compare versions before and after edits

Plan the export:

- Choose the output that matches your final platform

- Check scale, materials, and collision settings in downstream tools

- Record cinematic paths for a clean presentation

Export and integration

Marble supports export for:

- Web viewers

- VR and AR experiences

- Game engines and 3D software

This makes it suitable for interactive demos, previews, or asset pipelines. Pair it with your preferred engine to add interactivity, physics, or gameplay features beyond the initial scene.

Where Marble A Multimodal fits today

Marble shows strong potential for:

- Fast concept worlds from text

- Coherent spaces from multi-image sets

- Enhanced detail from short video clips

- Iterative edits for material and layout control

- Assembling larger connected areas

In my experience, the curated examples looked stronger than the world I generated myself on the first try. I expect the best results when the input is structured and when edits are applied thoughtfully. Upgraded plans may unlock modes that improve quality or speed.

Conclusion

Marble A Multimodal turns simple inputs into explorable 3D worlds with a focus on spatial intelligence. It reads structure, lighting, and materials from text, images, video, or basic 3D layouts and produces coherent scenes you can edit, expand, and export. The workflow supports beginners and professionals, and it fits into pipelines for web, VR, AR, and game engines.

My test run produced a usable world from a single prompt, with clear strengths in layout and lighting and room to improve object detail and text elements. The examples built from images and video showed strong results, and the editing tools made targeted refinements straightforward.

If you want a practical path from concept to a navigable scene, Marble A Multimodal offers a clear workflow:

- Start with a well-structured prompt or rich visual input

- Generate the scene and review it quickly

- Apply targeted edits with Panoedit

- Compose larger areas as needed

- Record and export for your destination platform

With careful input and iterative edits, you can shape coherent 3D environments that meet your goals and integrate with existing tools.
