Table of Contents

- Introduction

- What is UMO?

- Table Overview of UMO

- Key Features of UMO

- 1. Multi-Identity Matching

- 2. Identity Preservation

- 3. Reinforcement Learning Integration

- 4. Scalable Dataset Support

- 5. Open-Source Commitment

- Step-by-Step Guide to Using UMO

- Step 1: Clone the Repository

- Step 2: Create a Virtual Environment (Optional but Recommended)

- For UMO with UNO:

- For UMO with OmniGen2:

- Step 3: Install Requirements

- 3.1 Install UNO Requirements

- 3.2 Install OmniGen2 Requirements

- 3.3 Install UMO Requirements

- Step 4: Download Checkpoints

- Step 5: Run the Gradio Demo

- UMO with UNO:

- UMO with OmniGen2:

- Step 6: Inference

- Inference on XVerseBench (Single Subject)

- Inference on XVerseBench (Multi Subject)

- Step 7: OmniContext Inference

- UNO-Based OmniContext

- OmniGen2-Based OmniContext

- Demo Examples

- UMO vs OmniGen2 Comparison

- Installation Section

- Tips and Best Practices

- Frequently Asked Questions (FAQs)

- Q1. What makes UMO different from UNO and USO?

- Q2. Can UMO handle both synthetic and real images?

- Q3. Is UMO free to use?

- Q4. What are the hardware requirements for running UMO?

- Q5. How do I fix unstable results with OmniContext?

- Conclusion

UMO by ByteDance: Scaling Multi-Identity Consistency for Image Customization via Matching Reward

Introduction

As someone deeply involved in the world of image customization, I’ve always been fascinated by the challenge of maintaining consistent identity when working with multiple images and subjects. This becomes even more difficult when trying to integrate different identities into a single output.

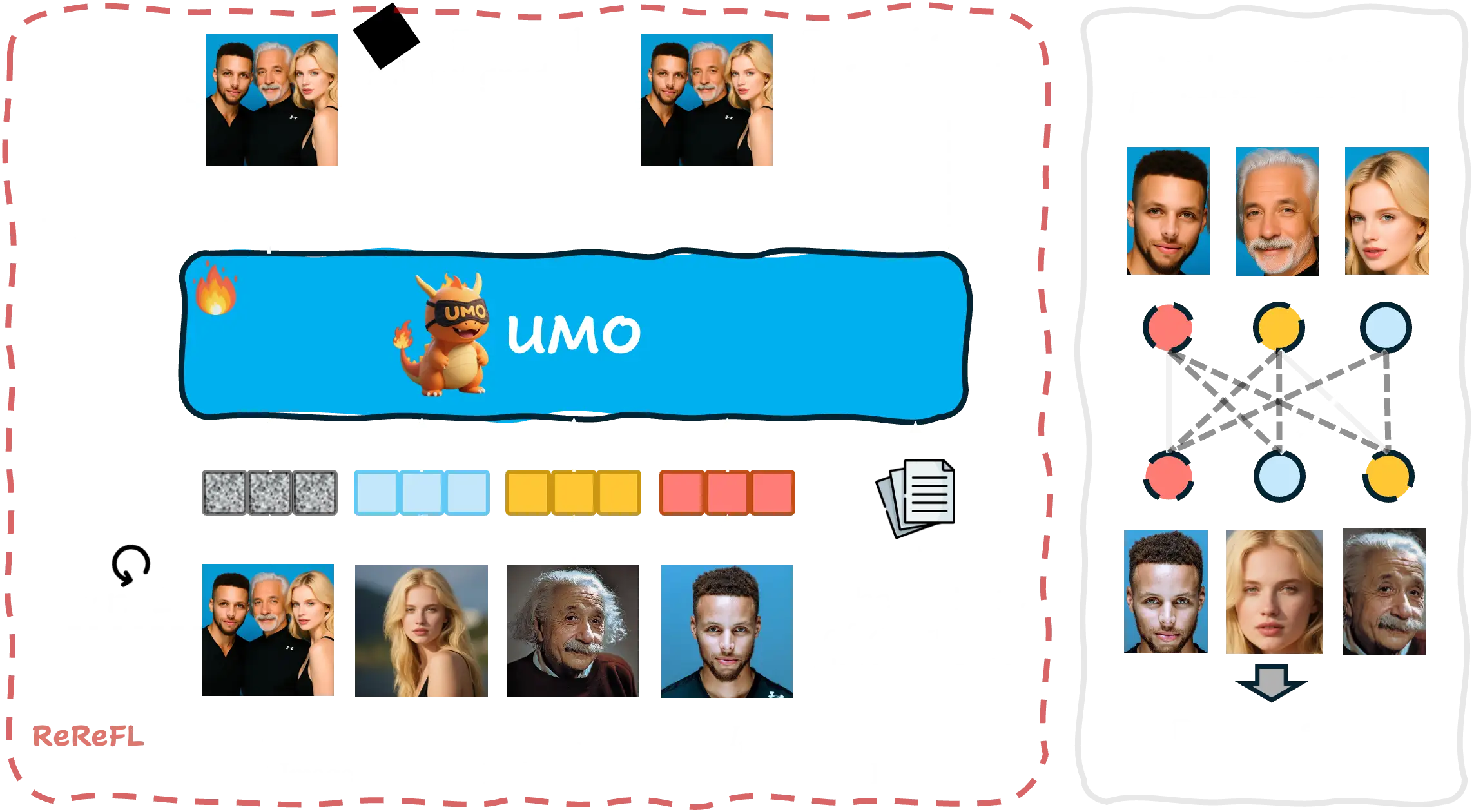

ByteDance recently announced UMO (Unified Multi-Identity Optimization), a framework that tackles this problem directly. It belongs to the UXO family alongside USO and UNO. UMO supports one-to-many identity customization: it can combine multiple identities with arbitrary subjects across varied scenarios while keeping each identity consistent.

The project will be completely open-sourced, including:

- Inference scripts

- Model weights

- Training code

This makes UMO a powerful resource for both researchers and developers looking to build new image customization models.

What is UMO?

UMO is a framework designed to solve a major issue in image customization: maintaining identity consistency across multiple references. Humans are very sensitive to facial details, so even minor inconsistencies can ruin the result. Traditional models often struggle with identity confusion, especially when multiple reference images are involved.

UMO changes this by introducing a multi-to-multi matching paradigm, which reformulates multi-identity generation as a global assignment optimization problem. In simpler terms, UMO matches identities across multiple references and subjects using a reinforcement learning approach applied to diffusion models.
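To make the "global assignment" idea concrete, here is a minimal Python sketch. It is not UMO's implementation: the similarity values are made-up toy data, `best_assignment` is a hypothetical helper, and brute force over permutations stands in for whatever solver the authors actually use. It only illustrates why matching all identities jointly can differ from picking the best match for each face independently.

```python
from itertools import permutations

def best_assignment(similarity):
    """Return (total, mapping) that maximizes the summed similarity.

    similarity[i][j] is the similarity between reference identity i and
    generated face j (toy numbers here, an assumption for illustration).
    mapping[i] is the generated face assigned to reference i.
    Brute force is fine for the handful of identities in one image.
    """
    n = len(similarity)
    best_total, best_perm = float("-inf"), None
    for perm in permutations(range(n)):
        total = sum(similarity[i][perm[i]] for i in range(n))
        if total > best_total:
            best_total, best_perm = total, perm
    return best_total, list(best_perm)

# Both references look most like generated face 0, so a per-row argmax
# would assign the same face twice. The global assignment resolves this.
ambiguous = [
    [0.90, 0.80],
    [0.85, 0.10],
]
total, mapping = best_assignment(ambiguous)
print(mapping)  # reference 0 -> face 1, reference 1 -> face 0
```

With these numbers the joint optimum gives up the single strongest pair (0.90) because doing so yields a better total across both identities.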

Here’s what makes UMO stand out:

- Maintains high-fidelity identity preservation

- Reduces identity confusion even with multiple references

- Works with scalable datasets containing both synthetic and real images

- Provides a new metric to measure and evaluate identity confusion

Table Overview of UMO

| Aspect | Details |
|---|---|
| Full Name | Unified Multi-Identity Optimization |
| Developer | ByteDance |
| Family | UXO (UMO, USO, UNO) |
| Primary Goal | High-consistency multi-identity image customization |
| Key Technique | Multi-to-multi matching using reinforcement learning |
| Open Source | Yes – includes scripts, weights, and training code |
| Core Use Cases | Multi-identity image generation, customization, research |
| Supported Models | UNO and OmniGen2 |
| Language | Python 3.11+ |

Key Features of UMO

Here are some of the most important features that make UMO valuable for developers, researchers, and creators:

1. Multi-Identity Matching

UMO excels at handling one-to-many identity scenarios, where multiple identities need to be accurately integrated into a single output. This is especially useful for projects involving group portraits or collaborative image generation.

2. Identity Preservation

By approaching the task as a global optimization problem, UMO keeps identity details consistent across all outputs. This solves a common issue where faces appear distorted or swapped between different frames or images.

3. Reinforcement Learning Integration

UMO applies reinforcement learning to diffusion models, using a matching reward (as the project title indicates) so that the model learns to reduce identity confusion.
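As a rough illustration of what a reward aimed at identity confusion could look like, the Python sketch below scores an image by how much more similar each generated face is to its own reference than to the other references. Everything here is an assumption for illustration: the function names, the 2-D toy embeddings, and the "matched minus unmatched" formula are hypothetical and are not taken from the UMO code or paper.

```python
import math

def cosine(a, b):
    """Cosine similarity between two non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def matching_reward(ref_embs, gen_embs, mapping):
    """Hypothetical reward: mean matched similarity minus mean unmatched.

    mapping[i] is the index of the generated face assigned to reference i.
    A high value means each face resembles its own reference and not the
    others; a value near zero means the identities are blended together.
    """
    matched, unmatched = [], []
    for i, ref in enumerate(ref_embs):
        for j, gen in enumerate(gen_embs):
            bucket = matched if mapping[i] == j else unmatched
            bucket.append(cosine(ref, gen))
    reward = sum(matched) / len(matched)
    if unmatched:
        reward -= sum(unmatched) / len(unmatched)
    return reward

refs = [[1.0, 0.0], [0.0, 1.0]]      # two distinct toy identities
distinct = [[1.0, 0.0], [0.0, 1.0]]  # faces that match their references
blended = [[1.0, 1.0], [1.0, 1.0]]   # both faces are a mix of the two

print(matching_reward(refs, distinct, [0, 1]))  # high: identities kept apart
print(matching_reward(refs, blended, [0, 1]))   # near zero: identities confused
```

In a reinforcement learning setup, a scalar like this would be the signal the generator is trained to increase; how UMO actually defines and applies its reward is described in the project's own materials.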

4. Scalable Dataset Support

UMO includes a multi-reference image dataset, combining synthetic and real-world data, which helps it train more effectively for real-world use cases.

5. Open-Source Commitment

In line with ByteDance’s previous initiatives, UMO is fully open-sourced, empowering developers worldwide to experiment, improve, and build upon the framework.

Step-by-Step Guide to Using UMO

Here’s a clear, step-by-step guide to help you set up UMO and get it running on your system.

Step 1: Clone the Repository

You need to clone the UMO repository along with its submodules (UNO and OmniGen2).
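The clone command in this step uses an SSH remote (`git@github.com:...`), which only works if an SSH key is registered with GitHub. The sketch below shows one way to derive the equivalent HTTPS URL and build the same clone command from Python. The helper names are hypothetical, and the submodules may still be configured with their own remote URLs, which this snippet does not change.

```python
import re

def ssh_to_https(remote: str) -> str:
    """Convert 'git@host:owner/repo.git' to 'https://host/owner/repo.git'."""
    match = re.fullmatch(r"git@([^:]+):(.+)", remote)
    if match is None:
        return remote  # not an SSH-style remote; leave it untouched
    host, path = match.groups()
    return f"https://{host}/{path}"

def clone_command(remote: str) -> list[str]:
    """Build the clone command as an argument list for subprocess.run."""
    return ["git", "clone", "--recurse-submodules", remote]

https_remote = ssh_to_https("git@github.com:bytedance/UMO.git")
print(" ".join(clone_command(https_remote)))
```

Passing the list to `subprocess.run(..., check=True)` would perform the clone; it is only printed here so the snippet has no side effects.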

git clone --recurse-submodules git@github.com:bytedance/UMO.git

cd UMO

Step 2: Create a Virtual Environment (Optional but Recommended)

For UMO with UNO:

python3 -m venv venv/UMO_UNO

source venv/UMO_UNO/bin/activate

For UMO with OmniGen2:

python3 -m venv venv/UMO_OmniGen2

source venv/UMO_OmniGen2/bin/activate

Step 3: Install Requirements

3.1 Install UNO Requirements

Follow the installation instructions provided by the UNO project.

3.2 Install OmniGen2 Requirements

Follow the installation instructions for OmniGen2 in the OmniGen2 GitHub installation guide.

3.3 Install UMO Requirements

pip install -r requirements.txt

Step 4: Download Checkpoints

UMO requires model checkpoints, which can be downloaded using the Hugging Face CLI.

pip install huggingface_hub hf-transfer

export HF_HUB_ENABLE_HF_TRANSFER=1

repo_name="bytedance-research/UMO"

local_dir="models/$repo_name"

huggingface-cli download --resume-download "$repo_name" --local-dir "$local_dir"

Step 5: Run the Gradio Demo

You can test UMO with a Gradio-based demo.
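Before launching either demo, it can help to confirm that the LoRA files from Step 4 are where the demo commands expect them. The following is a small sketch using only the standard library. The directory and file names are taken from the commands in this guide, while `missing_checkpoints` is a hypothetical helper name.

```python
from pathlib import Path

# Paths as used by the --lora_path arguments in this guide.
CHECKPOINT_DIR = Path("models/bytedance-research/UMO")
LORA_FILES = {
    "UNO": "UMO_UNO.safetensors",
    "OmniGen2": "UMO_OmniGen2.safetensors",
}

def missing_checkpoints(checkpoint_dir=CHECKPOINT_DIR):
    """Return the backbone names whose LoRA file is not on disk."""
    root = Path(checkpoint_dir)
    return [
        name
        for name, filename in LORA_FILES.items()
        if not (root / filename).is_file()
    ]

missing = missing_checkpoints()
if missing:
    print("Missing LoRA weights for:", ", ".join(missing))
else:
    print("All LoRA weights found.")
```

If a name is reported as missing, re-running the download command from Step 4 is the first thing to try.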

UMO with UNO:

python3 demo/UNO/app.py --lora_path models/bytedance-research/UMO/UMO_UNO.safetensors

UMO with OmniGen2:

python3 demo/OmniGen2/app.py --lora_path models/bytedance-research/UMO/UMO_OmniGen2.safetensors

Step 6: Inference

Inference on XVerseBench (Single Subject)

accelerate launch eval/UNO/inference_xversebench.py \
  --eval_json_path projects/XVerse/eval/tools/XVerseBench_single.json \
  --num_images_per_prompt 4 \
  --width 768 \
  --height 768 \
  --save_path output/XVerseBench/single/UMO_UNO \
  --lora_path models/bytedance-research/UMO/UMO_UNO.safetensors

Inference on XVerseBench (Multi Subject)

accelerate launch eval/UNO/inference_xversebench.py \
  --eval_json_path projects/XVerse/eval/tools/XVerseBench_multi.json \
  --num_images_per_prompt 4 \
  --width 768 \
  --height 768 \
  --save_path output/XVerseBench/multi/UMO_UNO \
  --lora_path models/bytedance-research/UMO/UMO_UNO.safetensors

Step 7: OmniContext Inference

UNO-Based OmniContext

accelerate launch eval/UNO/inference_omnicontext.py \
  --eval_json_path OmniGen2/OmniContext \
  --width 768 \
  --height 768 \
  --save_path output/OmniContext/UMO_UNO \
  --lora_path models/bytedance-research/UMO/UMO_UNO.safetensors

OmniGen2-Based OmniContext

accelerate launch -m eval.OmniGen2.inference_omnicontext \
  --model_path OmniGen2/OmniGen2 \
  --model_name UMO_OmniGen2 \
  --test_data OmniGen2/OmniContext \
  --result_dir output/OmniContext \
  --num_images_per_prompt 1 \
  --disable_align_res \
  --lora_path models/bytedance-research/UMO/UMO_OmniGen2.safetensors

Demo Examples

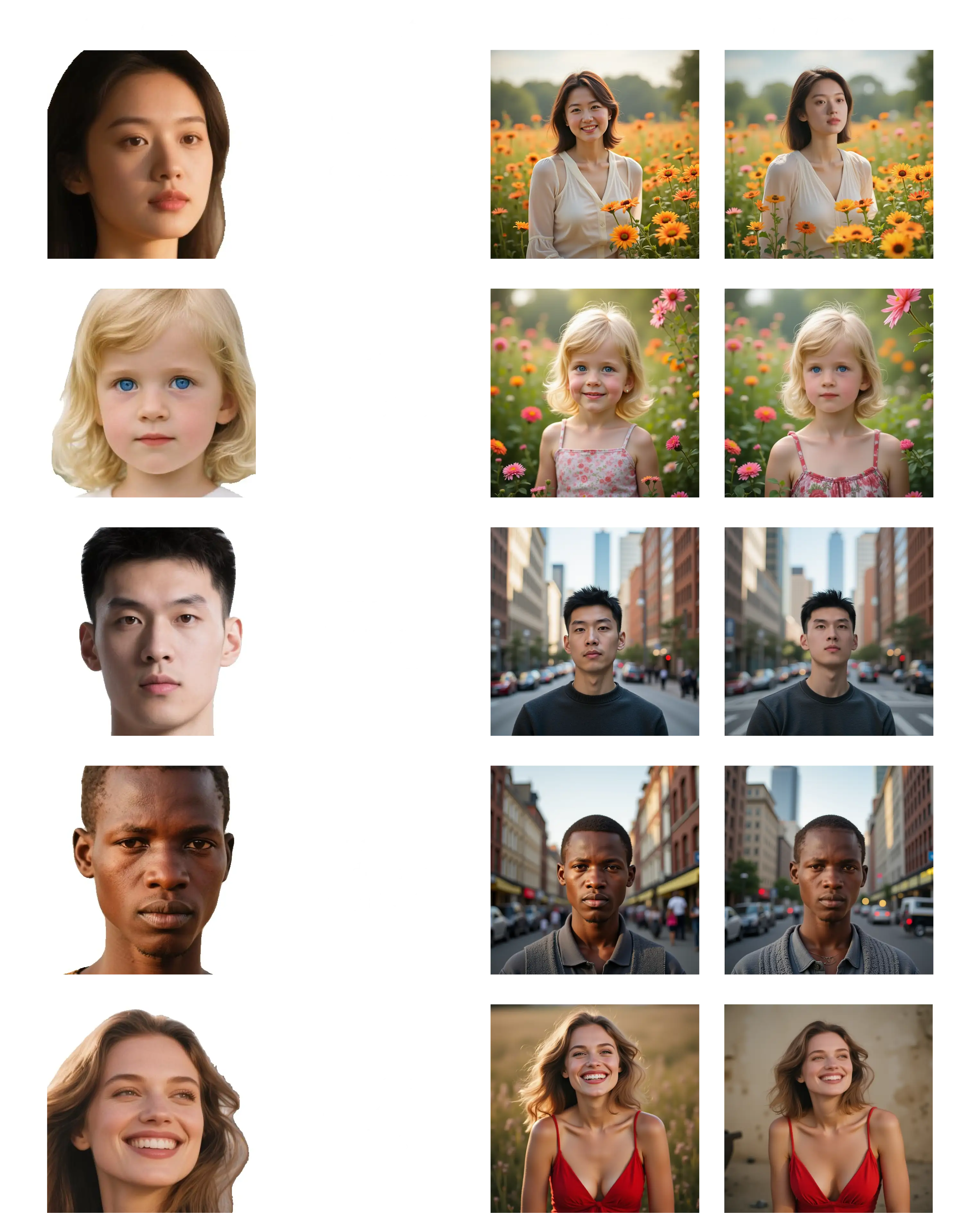

Here are some visual examples showcasing UMO's capabilities in multi-identity image customization:

The above example demonstrates UMO's ability to maintain consistent identity across multiple subjects while preserving high-quality details and natural appearance.

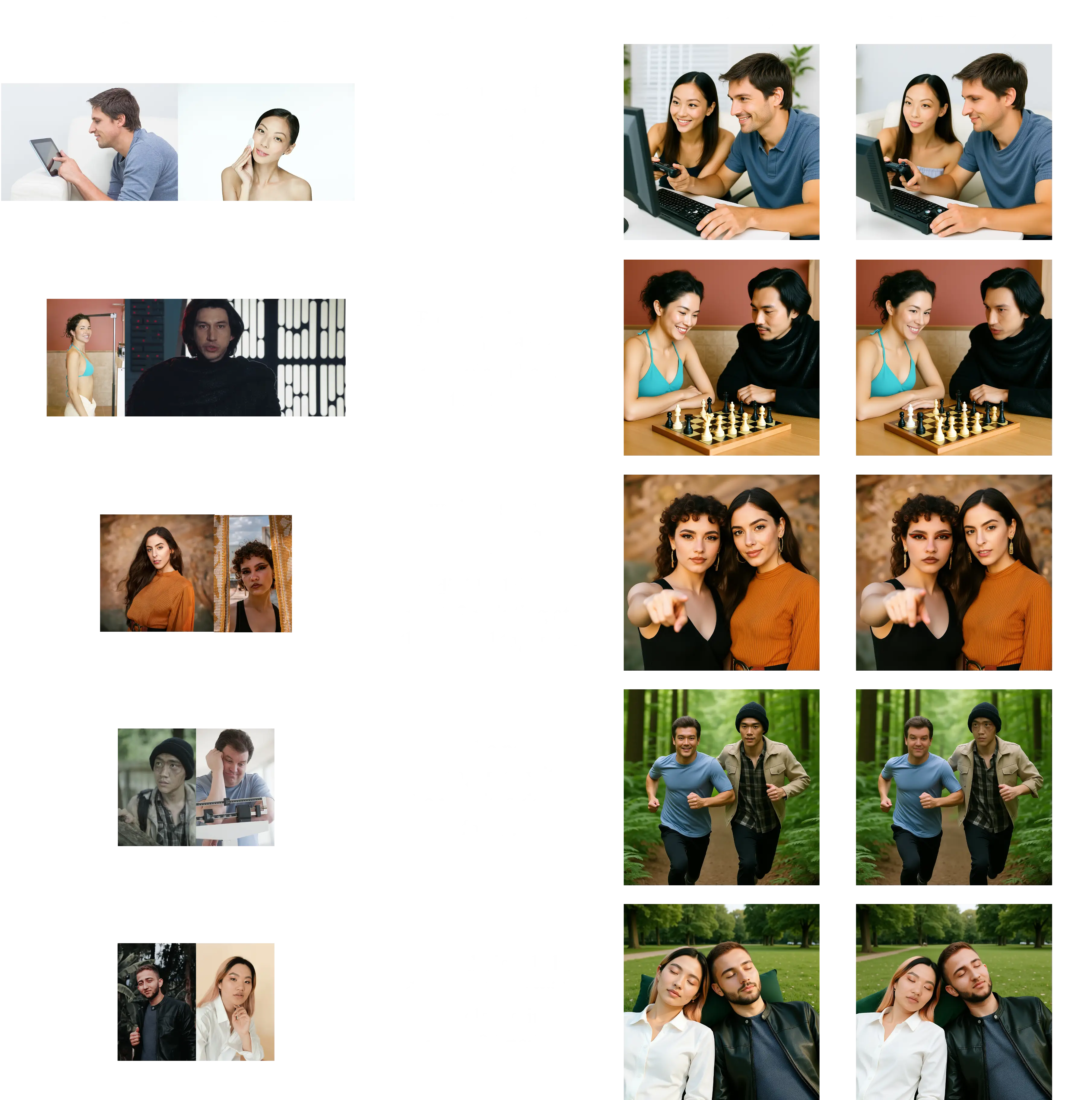

UMO vs OmniGen2 Comparison

This comparison shows how UMO improves upon OmniGen2 in terms of identity consistency and multi-subject handling. Notice the enhanced preservation of facial features and reduced identity confusion in the UMO results.

These examples highlight UMO's strength in:

- Multi-identity preservation across different subjects

- Consistent facial feature matching

- Reduced identity confusion compared to baseline models

- High-quality output with natural appearance

Installation Section

Here’s a simplified version of the full installation process:

| Step | Command |
|---|---|
| Clone Repository | git clone --recurse-submodules git@github.com:bytedance/UMO.git |
| Create Virtual Env (UNO) | python3 -m venv venv/UMO_UNO && source venv/UMO_UNO/bin/activate |
| Create Virtual Env (OmniGen2) | python3 -m venv venv/UMO_OmniGen2 && source venv/UMO_OmniGen2/bin/activate |
| Install Requirements | pip install -r requirements.txt |
| Download Checkpoints | huggingface-cli download --resume-download bytedance-research/UMO --local-dir models/bytedance-research/UMO |
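The two virtual environments in the table can also be created from Python with the standard-library `venv` module, which is what `python3 -m venv` calls under the hood. The sketch below assumes the same directory names as this guide. `create_envs` is a hypothetical helper, and activation still has to happen in your shell with the `source` commands shown above.

```python
import venv
from pathlib import Path

# Environment names used throughout this guide.
ENV_NAMES = ("UMO_UNO", "UMO_OmniGen2")

def create_envs(base_dir="venv", with_pip=True):
    """Create one virtual environment per backbone under base_dir.

    Returns the list of created environment directories.
    """
    created = []
    for name in ENV_NAMES:
        env_dir = Path(base_dir) / name
        venv.EnvBuilder(with_pip=with_pip).create(env_dir)
        created.append(env_dir)
    return created

# Example (not run automatically, since it writes to the current directory):
# for env_dir in create_envs():
#     print("created", env_dir)
```

Keeping the two environments separate avoids dependency conflicts between the UNO and OmniGen2 requirement sets.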

Tips and Best Practices

- Prompt Format: Use description prompts instead of instruction-style prompts for better results.

- Resolution: Set the image resolution between 768 and 1024 pixels for optimal output.

- Stability: UNO may produce unstable results with OmniContext due to prompt differences.

- Testing: Start with single-subject experiments before moving to multi-subject scenarios.
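The resolution and testing tips can be folded into a small helper that builds the XVerseBench command from Step 6. This is a sketch under assumptions: the script path and flags are copied from the commands in this guide, while the function name and the choice to reject sizes outside the 768 to 1024 range are my own.

```python
import shlex

def xversebench_command(subject_mode, size=768):
    """Build the UNO-based XVerseBench inference command as an argument list.

    subject_mode: "single" or "multi" (start with "single", per the tips).
    size: width and height in pixels, kept within the recommended range.
    """
    if subject_mode not in ("single", "multi"):
        raise ValueError("subject_mode must be 'single' or 'multi'")
    if not 768 <= size <= 1024:
        raise ValueError("size should be between 768 and 1024 pixels")
    return [
        "accelerate", "launch", "eval/UNO/inference_xversebench.py",
        "--eval_json_path",
        f"projects/XVerse/eval/tools/XVerseBench_{subject_mode}.json",
        "--num_images_per_prompt", "4",
        "--width", str(size),
        "--height", str(size),
        "--save_path", f"output/XVerseBench/{subject_mode}/UMO_UNO",
        "--lora_path", "models/bytedance-research/UMO/UMO_UNO.safetensors",
    ]

# Print the single-subject run first, then the multi-subject run.
for mode in ("single", "multi"):
    print(shlex.join(xversebench_command(mode)))
```

The printed lines can be pasted into a shell, or the list can be passed to `subprocess.run` once the environment from the installation steps is active.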

Frequently Asked Questions (FAQs)

Q1. What makes UMO different from UNO and USO?

UMO is specifically designed for multi-identity consistency, whereas UNO targets general subject-driven generation (from single to multiple subjects) and USO unifies style-driven and subject-driven generation.

Q2. Can UMO handle both synthetic and real images?

Yes, UMO is trained on a mixed dataset of synthetic and real-world images, which allows it to perform effectively in practical scenarios.

Q3. Is UMO free to use?

Absolutely. ByteDance has open-sourced the entire project, making it freely available for researchers and developers.

Q4. What are the hardware requirements for running UMO?

On the hardware side, you need a GPU, preferably an NVIDIA card with CUDA support. On the software side, you need Python 3.11 or higher.
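A quick way to check both requirements from inside your virtual environment is sketched below. The Python version threshold comes from this guide. The CUDA check assumes PyTorch is the installed deep learning backend, which this article does not state explicitly, so the function returns `None` when `torch` is not importable.

```python
import sys

def python_ok(version_info=sys.version_info, minimum=(3, 11)):
    """True if the interpreter is at least the minimum (major, minor)."""
    return tuple(version_info[:2]) >= minimum

def cuda_available():
    """True/False if PyTorch is installed, None if it is not.

    Assumption: the environment uses PyTorch; torch.cuda.is_available()
    reports whether a usable CUDA device was found.
    """
    try:
        import torch
    except ImportError:
        return None
    return torch.cuda.is_available()

print("Python 3.11+:", python_ok())
print("CUDA available:", cuda_available())
```

A `None` result for the CUDA line usually means the requirements from Step 3 have not been installed in the active environment yet.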

Q5. How do I fix unstable results with OmniContext?

Switch to description prompts and use a resolution between 768 and 1024 pixels to improve stability.

Conclusion

UMO by ByteDance marks a significant step forward in solving the multi-identity consistency problem in image customization. With its open-source nature, strong identity preservation, and robust multi-to-multi matching framework, UMO is poised to become an essential tool for researchers and developers.

Whether you are experimenting with group image generation or building a commercial application, UMO provides the resources and flexibility you need to achieve high-quality results while maintaining subject integrity.

By following the steps in this guide, you can install, run, and experiment with UMO in your own projects today.
