Table of Contents

- What Is LLaDA 2.0 Mini (Preview)?

- Overview at a Glance

- Key Features of LLaDA 2.0 Mini

- System Setup I Used

- Install Prerequisites

- Download the Model

- Why a Diffusion Language Model?

- How Diffusion LLMs Generate Text

- Training: Forward Masking and Reconstruction

- Inference: Iterative Unmasking Rounds

- How It Differs from Autoregressive Decoding

- Architecture Snapshot

- Use Cases

- Running the Model: Basic Workflow

- Quick Test: Simple Prompt

- Multi-Round Refinement Prompt and VRAM Observation

- Performance Notes

- Practical Tips

- Step-by-Step Guide: Installation and First Run

- Tool Use and Tool Calling

- Tuning Refinement Rounds

- Limitations and Considerations

- Troubleshooting Checklist

- Frequently Asked Questions

- Is diffusion generation always better than autoregressive decoding?

- Can I fine-tune LLaDA 2.0 Mini?

- How many refinement rounds should I use?

- Summary Table: What Matters Most

- Conclusion

Run LLaDA 2.0 Mini: Install Guide + GPU Test

LLaDA 2.0 Mini (Preview) is here. In this guide, I install it on Ubuntu, explain how it works, and run a quick GPU test to see memory use and behavior during iterative refinement. The model takes a different approach to text generation by using a diffusion-style process rather than strictly autoregressive decoding.

This release is an instruction-tuned diffusion language model built on a mixture-of-experts design. It targets practical tasks like chat, instruction following, tool use, and code generation, aiming for a favorable balance between computational cost and quality through selective expert activation.

If you want a compact model with strong instruction-following and parallel refinement during generation, LLaDA 2.0 Mini is worth a look.

What Is LLaDA 2.0 Mini (Preview)?

LLaDA 2.0 Mini is a diffusion language model (DLM) that reconstructs masked tokens in rounds instead of generating one token at a time from left to right. It is instruction-tuned, supports tool use and tool calling, and employs a mixture-of-experts (MoE) architecture to activate only a fraction of its parameters at inference.

The “Mini” tag signals a compact variant, and “Preview” marks an early release. Total non-embedding parameters are about 16B, yet only around 1.44B parameters are active per token at inference because of the MoE design. This selective activation is central to its efficiency goals.
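To put those two numbers side by side, here is the simple arithmetic behind "a fraction of its parameters", using the approximate ~16B and ~1.44B figures quoted in this guide:

```python
# Approximate figures quoted in this guide (non-embedding parameters).
TOTAL_PARAMS = 16e9     # ~16B parameters stored in the model
ACTIVE_PARAMS = 1.44e9  # ~1.44B parameters used to process each token

def active_fraction(total: float, active: float) -> float:
    """Share of the parameters that participate in computing one token."""
    return active / total

frac = active_fraction(TOTAL_PARAMS, ACTIVE_PARAMS)
print(f"Active per token: {frac:.1%}")  # prints "Active per token: 9.0%"
```

Per-token compute scales roughly with that ~9% share, but every expert still has to be loaded, so memory use follows the full parameter count.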

The model is optimized for real-world workloads such as chat agents, complex reasoning, and code synthesis, rather than focusing solely on pre-training benchmarks.

Overview at a Glance

| Item | Detail |
|---|---|
| Model | LLaDA 2.0 Mini (Preview) |
| Type | Instruction-tuned Diffusion Language Model (DLM) |
| Core Design | Mixture-of-Experts (MoE) |
| Total Parameters | ~16B (non-embedding) |
| Active Params at Inference | ~1.44B |
| Layers | 20 |
| Attention Heads | 16 |
| Context Length | 4K |
| Positional Encoding | RoPE |
| Vocabulary Size | ~158K tokens |
| Primary Tasks | Text generation, instruction following, chat agents, complex reasoning, code generation |
| Tool Use | Tool use and tool calling supported |
| Generation Method | Iterative masking and refinement (parallel token updates) |
| KV Cache | Not applicable (no AR-style cache speed-up) |
| Framework | Transformers-compatible |
| Test Platform | Ubuntu, NVIDIA RTX A6000 (48 GB VRAM) |
| Observed VRAM Peak | ~40 GB during 4 refinement rounds |

Key Features of LLaDA 2.0 Mini

- Diffusion-style text generation with iterative refinement rounds.

- Mixture-of-Experts architecture that activates only a subset of parameters per step.

- Instruction tuning for strong alignment with prompts and tasks.

- Tool use and tool calling support.

- 4K context window with RoPE and a large ~158K vocabulary.

- Parallel token updates during generation rather than strict left-to-right decoding.

System Setup I Used

I installed and ran LLaDA 2.0 Mini on Ubuntu with one NVIDIA RTX A6000 GPU (48 GB VRAM). The run included a basic prompt and a multi-round refinement prompt, while observing GPU memory use across refinement steps.

This setup gives a clear sense of VRAM requirements and runtime behavior when running diffusion-style inference with multiple refinement rounds on a single high-memory GPU.

Install Prerequisites

You’ll need Python with PyTorch and Transformers. A recent CUDA-enabled PyTorch build is recommended for GPU acceleration.

- Python environment ready (for example, via venv or conda).

- PyTorch installed with CUDA support.

- Transformers installed for model loading and text generation.

Step-by-step:

- Create and activate a Python environment.

- Install PyTorch with the correct CUDA build for your system.

- Install Transformers and related dependencies.
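Before downloading the weights, a quick check like the one below confirms the pieces are in place. It is a minimal sketch: it only reads installed package metadata and, if PyTorch is present, asks whether CUDA is visible.

```python
import importlib
import importlib.metadata

def environment_report() -> dict:
    """Report versions of the packages this guide relies on.

    Missing packages are reported as None instead of raising, so this is
    safe to run before everything is installed.
    """
    report = {}
    for pkg in ("torch", "transformers"):
        try:
            report[pkg] = importlib.metadata.version(pkg)
        except importlib.metadata.PackageNotFoundError:
            report[pkg] = None

    report["cuda_available"] = False
    if report["torch"] is not None:
        torch = importlib.import_module("torch")
        report["cuda_available"] = bool(torch.cuda.is_available())
        if report["cuda_available"]:
            report["gpu_name"] = torch.cuda.get_device_name(0)
    return report

if __name__ == "__main__":
    for key, value in environment_report().items():
        print(f"{key}: {value}")
```

If `cuda_available` comes back False with torch installed, you most likely have a CPU-only PyTorch build or a driver mismatch.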

Download the Model

LLaDA 2.0 Mini is available on Hugging Face. You’ll need a read token.

Step-by-step:

- Run `huggingface-cli login` (or `hf auth login` with recent huggingface_hub releases) and provide your token when prompted.

- Create a local directory for the model files.

- Use huggingface-cli to download the model into the directory you created.

Once downloaded, you can load the local path with Transformers and begin inference.
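If you prefer to script the download step, the sketch below just assembles the `huggingface-cli download` call. The repository id is my assumption of the model's Hugging Face name, and the local directory is a hypothetical path; confirm both before running. Recent huggingface_hub releases accept the same arguments via `hf download`, and `huggingface_hub.snapshot_download(repo_id=..., local_dir=...)` does the same from Python.

```python
import shlex

# Assumed repository id; confirm the exact name on the Hugging Face model card.
REPO_ID = "inclusionAI/LLaDA2.0-mini-preview"
# Hypothetical local directory for the model files.
LOCAL_DIR = "./models/llada2-mini"

def download_command(repo_id: str, local_dir: str) -> list[str]:
    """Build the CLI call that mirrors a model repo into local_dir."""
    return ["huggingface-cli", "download", repo_id, "--local-dir", local_dir]

cmd = download_command(REPO_ID, LOCAL_DIR)
print(shlex.join(cmd))
# To actually run it: subprocess.run(cmd, check=True)
```

Keeping the command as a list avoids shell-quoting issues when you pass it to `subprocess.run`.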

Why a Diffusion Language Model?

Traditional autoregressive (AR) models generate one token at a time using a causal mask. Diffusion language models instead learn to reconstruct masked tokens, allowing them to update many tokens in parallel during each refinement step.

This method enables an iterative process: the model proposes tokens, keeps high-confidence predictions, remasks low-confidence positions, and refines the sequence over several rounds. The approach can improve global consistency, as each round can consider both left and right context.

Because token updates happen in parallel rather than strictly sequentially, runtime behavior and optimization differ from AR decoding.

How Diffusion LLMs Generate Text

Training: Forward Masking and Reconstruction

During training, the model is given sentences in which a random fraction of the tokens is replaced by a mask token, and the transformer learns to predict all of the missing tokens at once. This random masking is the forward process; learning to reverse it teaches the model to reconstruct coherent text from partial information.
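A toy version of that forward masking step is sketched below. It follows the recipe from the original LLaDA work (draw a masking ratio t, then mask each token independently with probability t); LLaDA 2.0's exact training recipe may differ, and the `[MASK]` string stands in for the real mask token id.

```python
import random

MASK = "[MASK]"  # placeholder for the tokenizer's real mask token

def forward_mask(tokens, rng):
    """Toy forward process: draw a masking ratio t, then hide each token
    independently with probability t. Returns (t, masked_tokens)."""
    t = rng.uniform(1e-3, 1.0)  # keep t away from 0, where nothing gets masked
    masked = [MASK if rng.random() < t else tok for tok in tokens]
    return t, masked

rng = random.Random(0)
sentence = "the model learns to fill in missing words".split()
t, noisy = forward_mask(sentence, rng)
print(f"t={t:.2f}", noisy)
```

The training target is the original token at every `[MASK]` position; unmasked positions are given as context and are not scored.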

The training loss is an upper bound on the model's negative log-likelihood, so the model is still trained as a proper likelihood-based generative model while using the mask-and-reconstruct paradigm.
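For reference, this is the masked-diffusion loss as written in the original LLaDA paper (LLaDA 2.0 may use a modified recipe). Here $x_0$ is a clean sequence of length $L$, $x_t$ is that sequence with each token masked with probability $t$, and $\mathrm{M}$ is the mask token. Only masked positions contribute, each term is weighted by $1/t$, and the whole expression bounds the negative log-likelihood from above:

```latex
\mathcal{L}(\theta)
  = -\,\mathbb{E}_{t \sim \mathcal{U}(0,1],\; x_0,\; x_t}
    \left[ \frac{1}{t} \sum_{i=1}^{L}
      \mathbf{1}\!\left[ x_t^{i} = \mathrm{M} \right]
      \log p_\theta\!\left( x_0^{i} \mid x_t \right) \right]
  \;\ge\; -\,\mathbb{E}_{x_0}\!\left[ \log p_\theta(x_0) \right]
```

Minimizing $\mathcal{L}(\theta)$ therefore pushes down an upper bound on the negative log-likelihood, which is what makes the objective principled rather than a heuristic denoising loss.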

Inference: Iterative Unmasking Rounds

At inference, generation proceeds in rounds. The model starts from a fully masked output of a specified length. Each round, it predicts candidates for all masked positions, keeps tokens with high confidence, remasks the uncertain ones, and repeats.

After a set number of refinement rounds, the output converges to a fully unmasked sequence. You can choose the number of rounds to balance speed and quality.
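The round structure can be sketched as a small loop. This is a toy illustration of confidence-based unmasking, not LLaDA 2.0's actual sampler: `toy_predict` is a hypothetical stand-in for the model that returns a token and a made-up confidence for every masked position.

```python
import math

MASK = "[MASK]"

def iterative_unmask(length, rounds, predict):
    """Confidence-based unmasking loop.

    predict(seq) returns {position: (token, confidence)} for every masked
    position. Each round commits the most confident proposals and leaves
    (remasks) the rest for later rounds.
    """
    seq = [MASK] * length
    history = []
    for r in range(rounds):
        masked = [i for i, tok in enumerate(seq) if tok == MASK]
        if not masked:
            break
        # Spread the remaining masks evenly over the rounds that are left.
        k = math.ceil(len(masked) / (rounds - r))
        proposals = predict(seq)
        best = sorted(masked, key=lambda i: proposals[i][1], reverse=True)[:k]
        for i in best:
            seq[i] = proposals[i][0]
        history.append(list(seq))
    return seq, history

TARGET = "attention lets each token weigh every other token".split()

def toy_predict(seq):
    """Hypothetical model stand-in: proposes the target word, with higher
    confidence next to already committed tokens."""
    out = {}
    for i, tok in enumerate(seq):
        if tok == MASK:
            neighbours = sum(1 for j in (i - 1, i + 1)
                             if 0 <= j < len(seq) and seq[j] != MASK)
            out[i] = (TARGET[i], 0.5 + 0.2 * neighbours - 0.01 * i)
    return out

final, steps = iterative_unmask(len(TARGET), rounds=4, predict=toy_predict)
for n, state in enumerate(steps, 1):
    print(f"round {n}:", " ".join(state))
```

Every round re-predicts all still-masked positions with the full sequence in view, which is where the bidirectional context comes in.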

How It Differs from Autoregressive Decoding

- Context Access: AR models are causal and read left-to-right, while diffusion LLMs use bidirectional context during token updates.

- Parallel Updates: Diffusion models update many positions per step; AR models commit one token per step.

- KV Cache: AR decoding benefits from a KV cache for speed. Diffusion models do not use that cache style, so runtime characteristics are different and depend on the number of refinement rounds.
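The context-access difference comes down to the attention mask. A minimal sketch, where 1 means "row position may attend to column position":

```python
def causal_mask(n):
    """AR-style mask: position i may attend to positions 0..i only."""
    return [[1 if j <= i else 0 for j in range(n)] for i in range(n)]

def bidirectional_mask(n):
    """Diffusion-style mask: every position may attend to every position."""
    return [[1] * n for _ in range(n)]

for name, mask in (("causal", causal_mask(4)), ("bidirectional", bidirectional_mask(4))):
    print(name)
    for row in mask:
        print("  ", row)
```

This also explains the KV cache point: under a causal mask, earlier positions never see later ones, so their keys and values stay fixed and can be cached. With a bidirectional mask, changing any token can change the representation at every position, so that simple reuse no longer holds.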

Architecture Snapshot

LLaDA 2.0 Mini combines the diffusion generation paradigm with a mixture-of-experts backbone. The MoE router selects a subset of experts for each token, activating around 1.44B parameters at inference out of a total of ~16B non-embedding parameters.

The model uses 20 layers and 16 attention heads, with RoPE for positional encoding and a 4K context window. The vocabulary spans about 158K tokens, accommodating broad text coverage and tool-related tokens for calling and integration.
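To make "selects a subset of experts for each token" concrete, here is a generic top-k softmax router, a common MoE scheme. The expert count, k, and whether LLaDA 2.0 Mini adds shared experts or normalizes differently are not covered in this guide, so all numbers below are hypothetical.

```python
import math

def route_top_k(gate_logits, k):
    """Pick the k highest-scoring experts for one token and renormalize
    their scores with a softmax over just those k."""
    top = sorted(range(len(gate_logits)), key=lambda e: gate_logits[e], reverse=True)[:k]
    m = max(gate_logits[e] for e in top)  # subtract max for numerical stability
    weights = [math.exp(gate_logits[e] - m) for e in top]
    total = sum(weights)
    return {e: w / total for e, w in zip(top, weights)}

def moe_layer(x, experts, gate_logits, k):
    """Combine only the selected experts' outputs; the others never run."""
    routing = route_top_k(gate_logits, k)
    return sum(weight * experts[e](x) for e, weight in routing.items())

# Hypothetical example: 8 tiny "experts", each of which just scales its input.
experts = [lambda x, s=s: s * x for s in range(1, 9)]
logits = [0.1, 2.0, -1.0, 0.5, 3.0, 0.0, -0.5, 1.0]
print(route_top_k(logits, k=2))
print(moe_layer(1.0, experts, logits, k=2))
```

Only 2 of the 8 experts do any work for this token, which is the same idea that keeps the active parameter count far below the total.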

This design focuses on practical downstream tasks and allows flexible control over speed-versus-quality through the number of refinement rounds.

Use Cases

LLaDA 2.0 Mini is intended for:

- Text generation and instruction following.

- Chat agents with multi-turn dialogue.

- Complex reasoning prompts that benefit from iterative refinement.

- Code generation and related developer prompts.

- Tool use and tool calling where structured responses and external function calls are needed.

Running the Model: Basic Workflow

The following is the general process I used to run the model with Transformers after downloading it locally.

- Load the tokenizer and model from the local path.

- Move the model to the GPU.

- Tokenize the prompt.

- Generate output with the model’s diffusion-style inference.

- Decode and print the result.
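Those steps might look like the following with Transformers. Treat this as a sketch under assumptions: the local path is hypothetical, the model is assumed to ship custom modeling code (hence `trust_remote_code=True`), and the generation keyword names in `GEN_KWARGS` are assumed to match the model's custom `generate()`; confirm them on the model card.

```python
# Sketch: needs a CUDA GPU, torch, transformers, and the downloaded weights.
MODEL_PATH = "./models/llada2-mini"  # hypothetical local directory

# Assumed keyword names for the model's custom diffusion generate();
# verify against the model card before relying on them.
GEN_KWARGS = {"gen_length": 128, "steps": 32, "block_length": 32, "temperature": 0.0}

def build_messages(prompt: str) -> list[dict]:
    """Single-turn chat in the role/content format used by chat templates."""
    return [{"role": "user", "content": prompt}]

def run(prompt: str, model_path: str = MODEL_PATH) -> str:
    """Load tokenizer and model from disk, generate, and decode the result."""
    import torch
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(model_path, trust_remote_code=True)
    model = AutoModelForCausalLM.from_pretrained(
        model_path, trust_remote_code=True, torch_dtype=torch.bfloat16
    ).to("cuda").eval()

    # Tokenize the prompt through the model's chat template.
    enc = tokenizer.apply_chat_template(
        build_messages(prompt), add_generation_prompt=True,
        return_tensors="pt", return_dict=True,
    )
    input_ids = enc["input_ids"].to("cuda")
    with torch.no_grad():
        output = model.generate(inputs=input_ids, **GEN_KWARGS)
    # Depending on the custom generate(), output may or may not include the prompt.
    return tokenizer.decode(output[0], skip_special_tokens=True)
```

Usage: `print(run("In two sentences, explain how transformers use attention."))`. Imports live inside `run()` so the helpers can be reused without loading PyTorch.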

For a simple test, a short prompt can confirm that the model loads correctly and responds as expected. You can then try more structured prompts to observe iterative refinement.

Quick Test: Simple Prompt

I ran a minimal prompt to verify loading and generation speed. After tokenization and a short generation, the model responded promptly and produced a coherent output.

This confirmed the installation, local path loading, and GPU execution were set up correctly.

Multi-Round Refinement Prompt and VRAM Observation

To observe diffusion-style behavior, I used a structured prompt that instructs the model to:

- Start with an all-mask reply of exactly 32 tokens.

- Perform four refinement rounds.

- In each round, replace only the tokens with the highest confidence and keep others as [MASK].

- After round four, output the fully unmasked final answer.
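The exact wording of my prompt isn't reproduced here, so this sketch rebuilds it from the four instructions above with hypothetical phrasing, parameterized so you can vary length and rounds. Keep in mind that the prompt asks the model to narrate the procedure in text; the number of denoising steps the sampler actually runs is set by the generation arguments, not by the prompt.

```python
def refinement_prompt(topic: str, length: int = 32, rounds: int = 4) -> str:
    """Rebuild the structured refinement prompt (hypothetical wording)."""
    return (
        f"Start with an all-mask reply of exactly {length} tokens. "
        f"Perform {rounds} refinement rounds. In each round, replace only the "
        "tokens you are most confident about and keep the others as [MASK]. "
        f"After round {rounds}, output the fully unmasked final answer.\n\n"
        f"Task: {topic}"
    )

print(refinement_prompt("In two sentences, explain how transformers use attention."))
```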

The topic prompt asked for a two-sentence explanation of how transformers use attention. While this ran, I monitored GPU memory. VRAM climbed during the refinement steps and peaked around 40 GB on the RTX A6000 (48 GB). The model completed four rounds and returned a concise, correct explanation.

This test illustrates that memory use can rise across rounds, so plan VRAM headroom accordingly when choosing the number of refinement steps and output length.
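One way to reproduce the VRAM observation is to poll `nvidia-smi` from a second terminal while generation runs. This sketch reads GPU 0 only, reports MiB as `nvidia-smi` does, and uses an arbitrary polling interval.

```python
import subprocess
import time

QUERY = ["nvidia-smi", "--query-gpu=memory.used,memory.total",
         "--format=csv,noheader,nounits"]

def parse_memory(csv_text: str) -> list[tuple[int, int]]:
    """Parse lines like '1024, 8192' into (used, total) MiB, one per GPU."""
    rows = []
    for line in csv_text.strip().splitlines():
        used, total = (int(v.strip()) for v in line.split(","))
        rows.append((used, total))
    return rows

def watch_peak(seconds: float, interval: float = 0.5) -> int:
    """Poll GPU 0 for `seconds` and return the peak memory.used in MiB."""
    peak = 0
    deadline = time.monotonic() + seconds
    while time.monotonic() < deadline:
        out = subprocess.run(QUERY, capture_output=True, text=True, check=True).stdout
        peak = max(peak, parse_memory(out)[0][0])
        time.sleep(interval)
    return peak
```

`nvidia-smi` counts everything the process has reserved, including PyTorch's cached blocks. From inside the generating process, `torch.cuda.max_memory_allocated()` reports the allocator's own peak instead.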

Performance Notes

- Memory Use: On a 48 GB GPU, a four-round refinement with a 32-token masked sequence peaked around 40 GB VRAM in my run. Larger sequences or more rounds can increase memory use.

- Speed: The model returns initial outputs quickly and then proceeds through refinement. The total time reflects the chosen number of rounds and the size of the masked sequence.

- No KV Cache Acceleration: Since decoding is not strictly left-to-right, there is no autoregressive KV cache speed-up. Performance tuning centers on round count, batch size, and sequence length.
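A back-of-the-envelope estimate helps explain the ~40 GB peak. Assuming the weights are loaded in bf16 (I haven't stated the dtype above, so this is an assumption), ~16B parameters take about 32 GB on their own. Activations, the embedding table (not included in the non-embedding count), and framework overhead plausibly account for the rest.

```python
BYTES_PER_PARAM = {"fp32": 4, "bf16": 2, "fp16": 2, "int8": 1}

def weight_memory_gb(num_params: float, dtype: str = "bf16") -> float:
    """Memory needed just to hold the weights, in GB (10**9 bytes).

    With MoE, every expert must be resident even though only a few run
    per token, so pass the total parameter count, not the active one.
    """
    return num_params * BYTES_PER_PARAM[dtype] / 1e9

print(f"{weight_memory_gb(16e9):.0f} GB")  # prints "32 GB" for ~16B params in bf16
```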

Practical Tips

- Start Small: Begin with shorter masked lengths and fewer rounds to gauge speed and memory behavior on your GPU.

- Scale Incrementally: Increase round count or sequence length step-by-step while monitoring VRAM.

- Use Local Paths: Downloading the model to a local directory and loading from disk helps with reliability and repeatability.

- Keep Dependencies Current: Use up-to-date PyTorch and Transformers to benefit from optimizations and bug fixes.

Step-by-Step Guide: Installation and First Run

Follow these steps to get up and running on a CUDA-enabled Ubuntu system.

- Prepare the environment
  - Create a Python virtual environment.
  - Install PyTorch with CUDA support appropriate for your GPU and driver.
  - Install Transformers.

- Authenticate to Hugging Face
  - Run `huggingface-cli login` (or `hf auth login` with recent huggingface_hub releases).
  - Paste your read token when prompted.

- Download the model
  - Create a local directory for LLaDA 2.0 Mini.
  - Use huggingface-cli to download the model files into that directory.

- Load the model with Transformers
  - Load the tokenizer and model from the local path.
  - Move the model to the GPU.

- Run a simple prompt
  - Tokenize a short prompt.
  - Generate output and decode it.
  - Confirm that the model responds as expected.

- Try a refinement-style prompt
  - Instruct the model to start with an all-mask output of a set length.
  - Specify the number of refinement rounds.
  - Observe VRAM use during the rounds.
  - Inspect the final unmasked output.

Tool Use and Tool Calling

LLaDA 2.0 Mini supports tool use and tool calling, adding flexibility for practical applications. This allows prompts that instruct the model to call external tools or functions when needed, making it suitable for workflows that mix natural language reasoning with structured tool invocation.

For reliable tool interaction, keep prompts explicit about when and how to call tools, and design outputs that are easy to parse and verify.
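This guide doesn't cover LLaDA 2.0 Mini's exact tool-call output format, so the sketch below assumes a hypothetical JSON shape with `name` and `arguments` fields and a hypothetical `get_weather` tool. Check the model's chat template for the real format; the point is to validate a call before executing anything.

```python
import json

# Hypothetical tool registry; names and required arguments are illustrative.
TOOLS = {"get_weather": {"required": ["city"]}}

def parse_tool_call(text: str):
    """Extract and validate a JSON tool call such as
    {"name": "get_weather", "arguments": {"city": "Paris"}}.

    Returns (name, arguments) or raises ValueError with a reason.
    """
    start, end = text.find("{"), text.rfind("}")
    if start == -1 or end < start:
        raise ValueError("no JSON object found")
    try:
        call = json.loads(text[start:end + 1])
    except json.JSONDecodeError as exc:
        raise ValueError(f"invalid JSON: {exc}") from exc
    name, args = call.get("name"), call.get("arguments", {})
    if name not in TOOLS:
        raise ValueError(f"unknown tool: {name!r}")
    if not isinstance(args, dict):
        raise ValueError("arguments must be a JSON object")
    missing = [key for key in TOOLS[name]["required"] if key not in args]
    if missing:
        raise ValueError(f"missing arguments: {missing}")
    return name, args

print(parse_tool_call('Calling tool: {"name": "get_weather", "arguments": {"city": "Paris"}}'))
```

Rejecting unknown tools and missing arguments up front keeps a malformed generation from reaching your real functions.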

Tuning Refinement Rounds

Refinement rounds control the speed-versus-quality trade-off during diffusion-style inference.

- Fewer rounds: Faster responses with less iterative polishing.

- More rounds: More opportunities to correct uncertain tokens and improve coherence.

Choose a round count that fits your latency and quality goals, then validate on your target tasks.
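One way to see the trade-off is to count how many tokens must be committed per round. Under a simple even split (an assumption; real samplers may instead commit every token above a confidence threshold), the 32-token, four-round test commits 8 tokens per round, and fewer rounds force more tokens to be fixed at once with fewer chances to revise them.

```python
def unmask_schedule(gen_length: int, rounds: int) -> list[int]:
    """Split gen_length masked tokens as evenly as possible over rounds,
    giving earlier rounds the extra token when it doesn't divide evenly."""
    base, extra = divmod(gen_length, rounds)
    return [base + 1 if r < extra else base for r in range(rounds)]

for rounds in (1, 2, 4, 8):
    print(rounds, unmask_schedule(32, rounds))
```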

Limitations and Considerations

- Context Length: The 4K window works for chat and moderate-length prompts but is not suited for very long documents or extended transcripts.

- Memory Footprint: VRAM can climb with longer outputs and more refinement rounds. Plan for headroom on GPUs with lower memory.

- Runtime Characteristics: Without a KV cache, scaling behavior differs from AR models. Monitor both latency and throughput on your workflows.

Troubleshooting Checklist

- Model won’t load on GPU: Confirm CUDA, PyTorch build, and drivers are aligned.

- Out-of-memory errors: Reduce sequence length, batch size, or number of refinement rounds.

- Slow responses: Lower the round count or shorten masked sequences; verify that computations are on GPU and not CPU-bound.

- Tokenization issues: Ensure the tokenizer matches the model and that you’re loading both from the same local directory.

Frequently Asked Questions

Is diffusion generation always better than autoregressive decoding?

No. Diffusion offers parallel token updates and iterative refinement, which can help in certain tasks. Autoregressive decoding benefits from KV cache acceleration and can be faster for long sequences. The right choice depends on your workload and constraints.

Can I fine-tune LLaDA 2.0 Mini?

This guide focuses on installation and inference. The model is instruction-tuned already. For fine-tuning, consult the model card and repository materials for supported methods and constraints.

How many refinement rounds should I use?

Start with a small number (for example, two to four) and adjust based on latency and quality. Observe VRAM use and adjust sequence length to stay within your GPU limits.

Summary Table: What Matters Most

| Area | What to Know |
|---|---|
| Generation Style | Iterative masking and refinement; parallel token updates |
| Architecture | Mixture-of-Experts; ~16B total params, ~1.44B active at inference |
| Context Window | 4K with RoPE |
| Tasks | Chat, instruction following, reasoning, code, tool use/calling |
| Performance | No AR-style KV cache; round count and sequence length drive runtime |
| Memory | VRAM rises with rounds and token length; plan headroom |
| Setup | Transformers-based; requires PyTorch + CUDA for GPU inference |

Conclusion

LLaDA 2.0 Mini (Preview) brings diffusion-style generation and mixture-of-experts efficiency to instruction-following workloads. It updates many tokens in parallel per round, refines uncertain positions, and supports tool use and tool calling for practical applications.

On a single RTX A6000, a four-round refinement over a 32-token masked sequence peaked around 40 GB VRAM and produced a clear, correct answer. If you want controllable round-based refinement, broad task coverage, and a compact active parameter footprint at inference, this model is a strong option to evaluate on your tasks.

Subscribe to our newsletter

Get the latest updates and articles directly in your inbox.

Related Posts

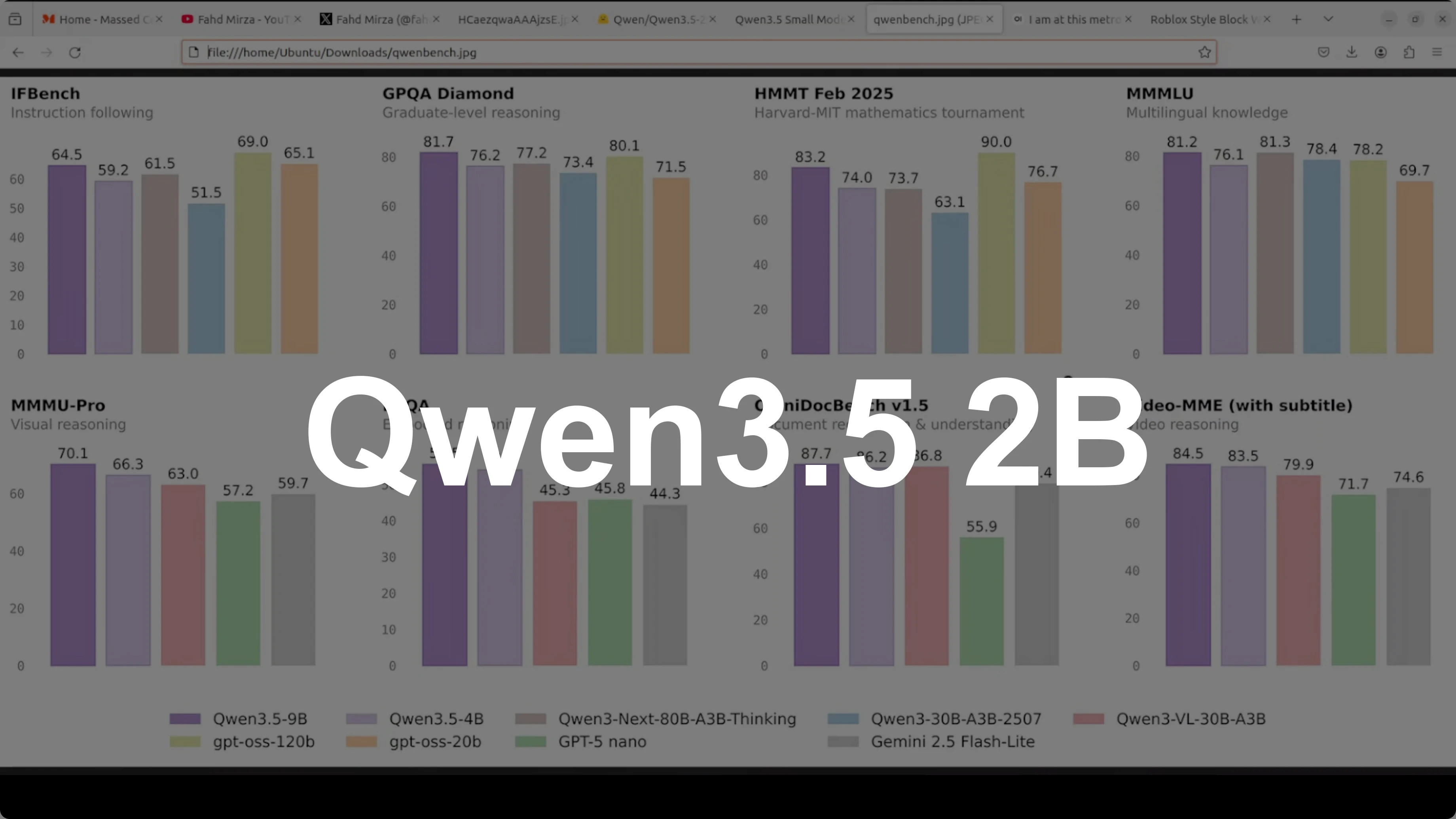

Qwen3.5 2B: The Smart, Cost-Free AI That Runs Anywhere

Qwen3.5 2B: The Smart, Cost-Free AI That Runs Anywhere

Qwen3.5 4B: Inside China’s Advanced Multimodal AI System

Qwen3.5 4B: Inside China’s Advanced Multimodal AI System

How to Access Terminal UI Instead of Web UI in OpenClaw

How to Access Terminal UI Instead of Web UI in OpenClaw